Leveraging open data to tackle cyber disinformation

Innovative methods to spread disinformation require innovative methods to counter it

In today’s increasingly digital world, protecting the EU’s cyber realm is becoming ever more important for safeguarding digital innovation from threats and vulnerabilities. One of the contemporary challenges identified by ENISA in its annual Threat Landscape report (2022) on the state of cybersecurity is the spread of disinformation. The report gives examples of how disinformation online (also called cyber disinformation) poses a challenge to democracy and public debate, and it notes that the distribution of disinformation can be connected to cyberattacks.

In this data story, we harness open data to understand the public perception of disinformation and demonstrate the potential of open data as a powerful tool to combat it. First, we explore the definition of disinformation. Then we use open data to understand the risks posed by disinformation. Next, we look at how advanced technologies contribute to online disinformation. Lastly, we see how technology tools powered by open data can offer a response to disinformation.

Cyber disinformation in a nutshell

In the 2018 European Commission communication ‘Tackling online disinformation: a European approach’, disinformation is defined as ‘false or misleading content that is spread with an intention to deceive or secure economic or political gain, and which may cause public harm’. Of course, people may also share inaccurate information by accident (called misinformation). Disinformation differs from misinformation in that it is spread deliberately, and, in certain circumstances, it can pose a cyber threat.

Some of these threats come as disinformation campaigns that often use digital channels like social media, email, and websites to disseminate false information. These campaigns can be a means for various types of cyberattacks, such as deceptive online identity theft attempts (phishing attacks) or the malicious spreading of harmful software (malware distribution). Technological advancements in AI and generative AI have also introduced innovative methods to spread disinformation online and breach social networks. In response, innovative methods such as tools that leverage open data have been developed to fight the spread of disinformation.

Citizens’ perceptions about the risks of disinformation

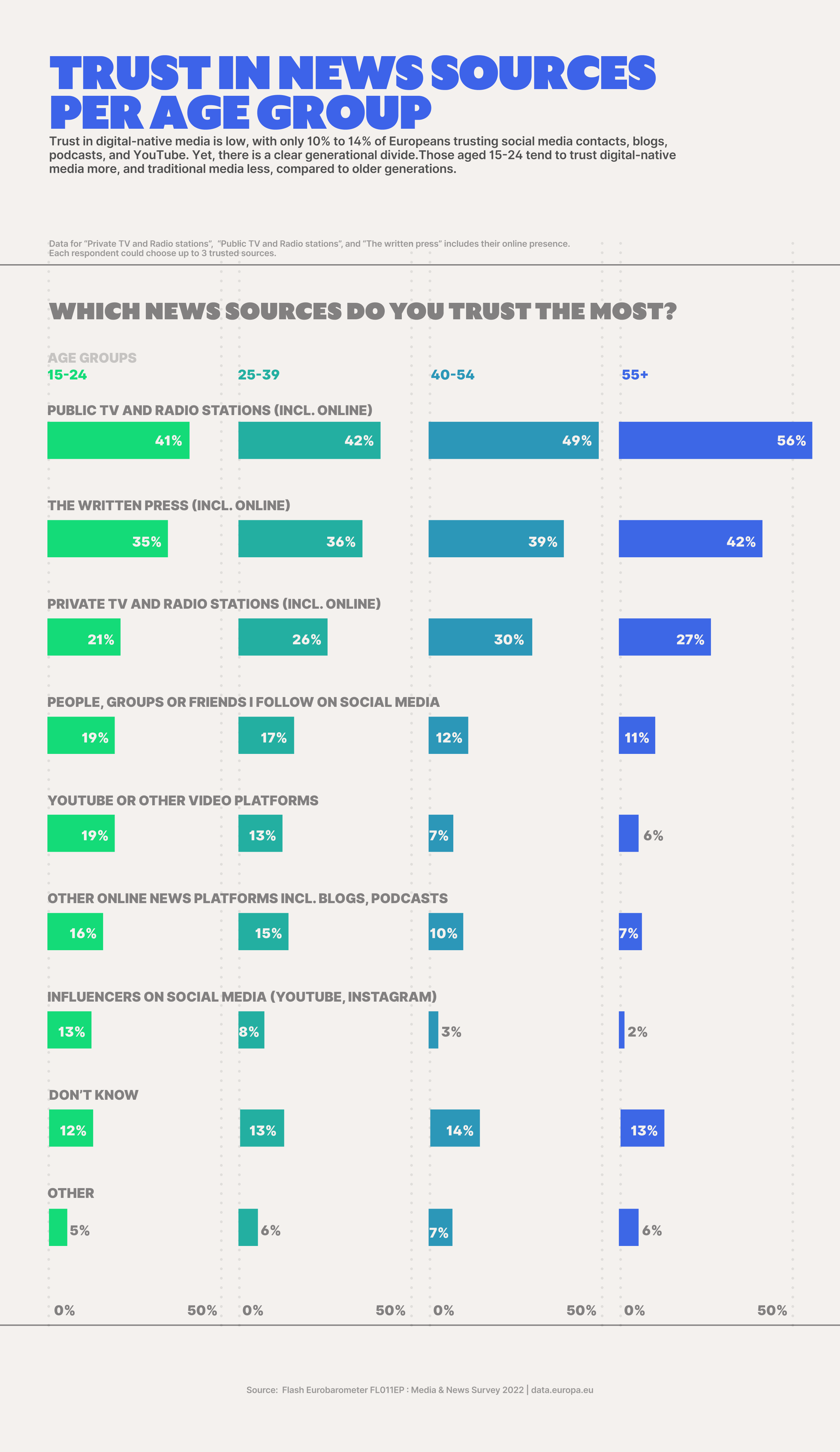

We can use open data to understand the risks of disinformation and its impact on our society. As one example of an impact on society, research by the European Foundation for the Improvement of Living and Working Conditions (Eurofound), an EU agency, reported that trust in the news media in the EU has been declining. This finding is supported by a Eurobarometer on Media and News (2022) which showed a sense of doubt among surveyed respondents when it comes to online media outlets. Notably, traditional broadcast and print media (and the online accounts of these traditional media outlets) rank highest on average as trusted news sources (49 % and 39 %, respectively). This holds across age groups as we can see in Figure 1. For example, both younger respondents aged 15 to 24 (41 %) and older respondents aged 55 and above (56 %) report high trust in traditional broadcast media, although there is a 15 percentage point difference in the levels of trust reported by these two age groups.

Figure 1: Trusted news sources in the EU-27 by age group

Source: Eurobarometer Media & News Survey 2022

For online news platforms and social media channels, on average, one in seven respondents (14 %) report that they trust people, groups or friends they follow on social media to give them truthful news. Moreover, 11 % trust online news platforms such as blogs and podcasts, 10 % trust YouTube and other video platforms, and 5 % trust influencers on social media. There are also generational differences in these reports, with younger citizens typically trusting online news platforms and social media channels more than older citizens do (see Figure 1).

Furthermore, the survey highlights a shift in news consumption habits among young viewers aged 15 to 24. Younger respondents are much more likely than older respondents to use social media platforms and blogs (46 % of 15- to 24-year-olds compared to 15 % of respondents aged 55 and older) as well as YouTube and other video platforms (34 % versus 8 %, respectively).
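The size of these generational gaps can be expressed both in percentage points and as a ratio. The following is a minimal sketch that uses only the four usage figures quoted above; the dictionary layout and the `age_gap` helper are illustrative assumptions, not part of the Eurobarometer dataset or its tooling.

```python
# Usage shares quoted in the text (percent of respondents), by age group.
# The structure and names below are hypothetical, chosen for illustration.
usage = {
    "social media platforms and blogs": {"15-24": 46, "55+": 15},
    "YouTube and other video platforms": {"15-24": 34, "55+": 8},
}


def age_gap(shares):
    """Return the young-old gap in percentage points and the young/old ratio."""
    young, old = shares["15-24"], shares["55+"]
    return young - old, young / old


gaps = {source: age_gap(shares) for source, shares in usage.items()}

for source, (points, ratio) in gaps.items():
    print(f"{source}: {points} percentage points, ratio {ratio:.1f}")
```

Reporting the gap in percentage points avoids the ambiguity of a relative percentage change when comparing two shares.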

In addition to a difference between age groups, there is also a difference over time in which news sources are most trusted. Notably, there has been a decline in the use of traditional news websites among young viewers, with a 9 % decrease observed since 2018. This shift in preference towards social media could indicate a changing media landscape where younger generations are more reliant on user-generated content and social networks for their information. Indeed, Eurofound identified that social media can be a potential source of unverified news content and louder, less nuanced perspectives.

Even though many citizens do not consider online media outlets to be reliable sources of information, most respondents to the Eurobarometer on Media and News expressed some level of confidence in their ability to recognise disinformation. Specifically, 12 % of respondents reported feeling highly confident and an additional 52 % reported feeling somewhat confident, which together amounts to 64 % of respondents. This suggests that while trust in online media may be low, many individuals believe they have the skills to discern accurate information from disinformation.

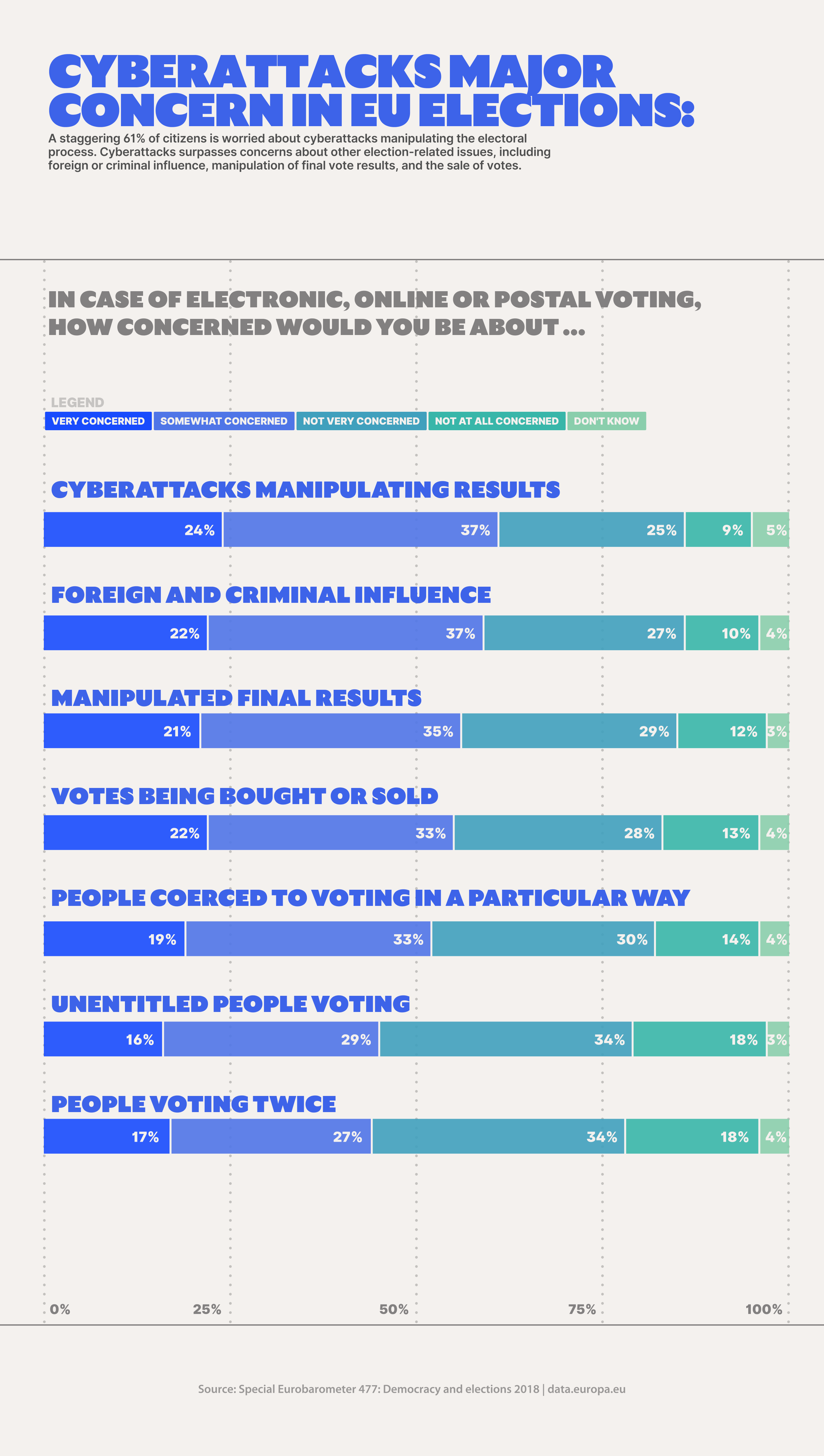

In addition to the reported decline in trust in news media, disinformation poses another societal risk when it is used to interfere in democratic decision-making processes. For instance, disinformation could be spread through social media platforms, forums, websites and blogs to influence political agendas or to trick individuals into believing false stories or rumours about candidates. A Eurobarometer on Democracy and Elections (2018) investigated the concern citizens have about voting and election interference. Survey respondents most often answered that they were concerned about elections being manipulated through cyberattacks (61 %). This was higher than concern about foreign actors and criminal groups influencing elections covertly (59 %), the final result of an election being manipulated (56 %) or people being coerced to vote in a particular way (55 %) (Figure 2).

Figure 2: The concern of citizens about elections being influenced by different means

Source: Eurobarometer on Democracy and Elections 2018

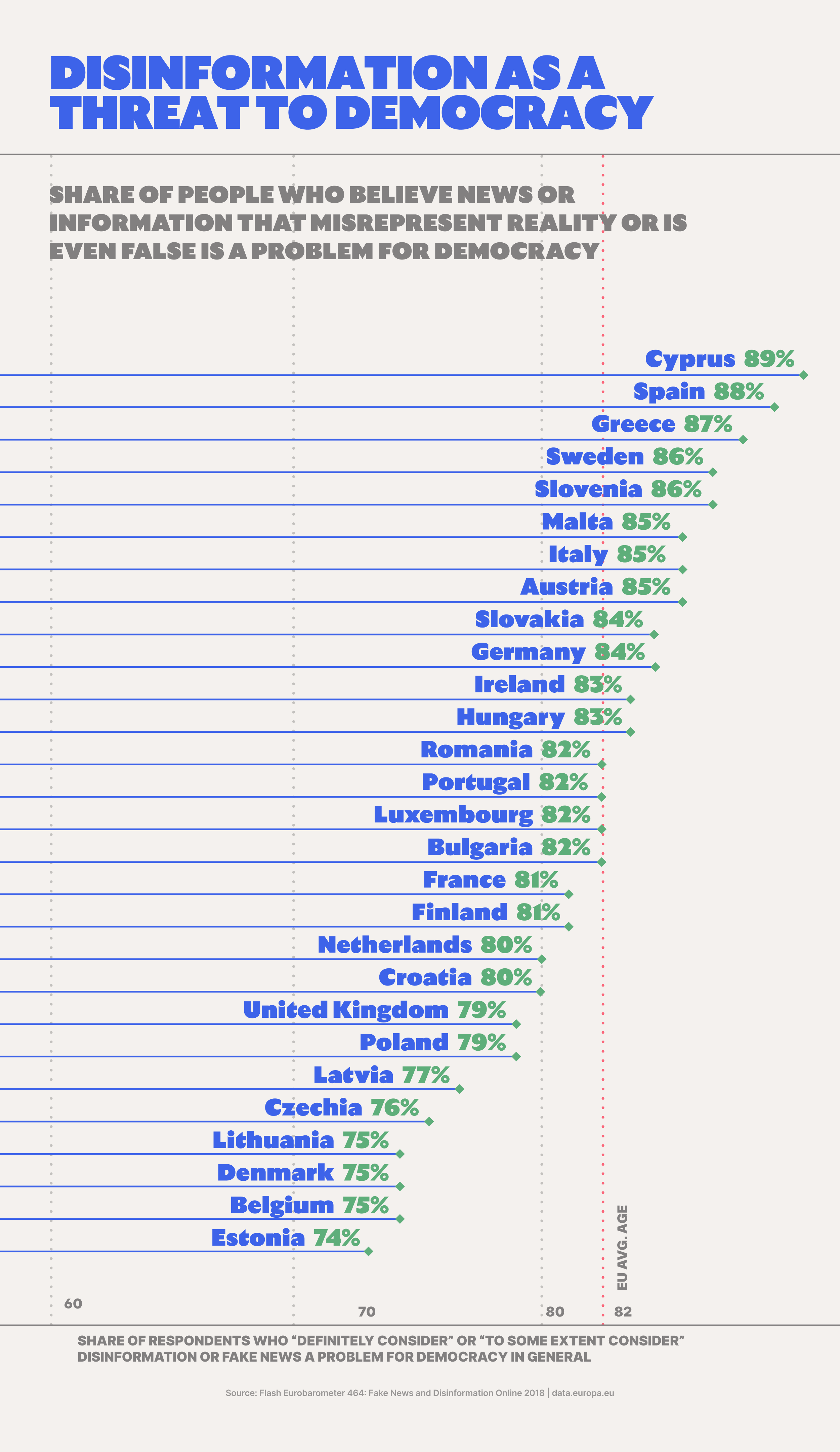

A Eurobarometer on Fake News and Disinformation Online (2018) reinforces this point from another perspective. The majority of respondents (83 %) to this survey agreed that fake news represents a danger to democracy in general (Figure 3). Specifically, the survey showed that 45 % of EU citizens expressed definite levels of concern, while an additional 38 % demonstrated some degree of concern regarding the influence of fake news on democracy in general. Cyprus, Spain, and Greece report above-average values, while Estonia, Belgium, Denmark, and Lithuania register lower percentages, though still notably high at 74–75 %.

Figure 3: The concern of EU citizens that fake news is a problem for democracy in general

Source: Eurobarometer on Fake News and Disinformation Online 2018

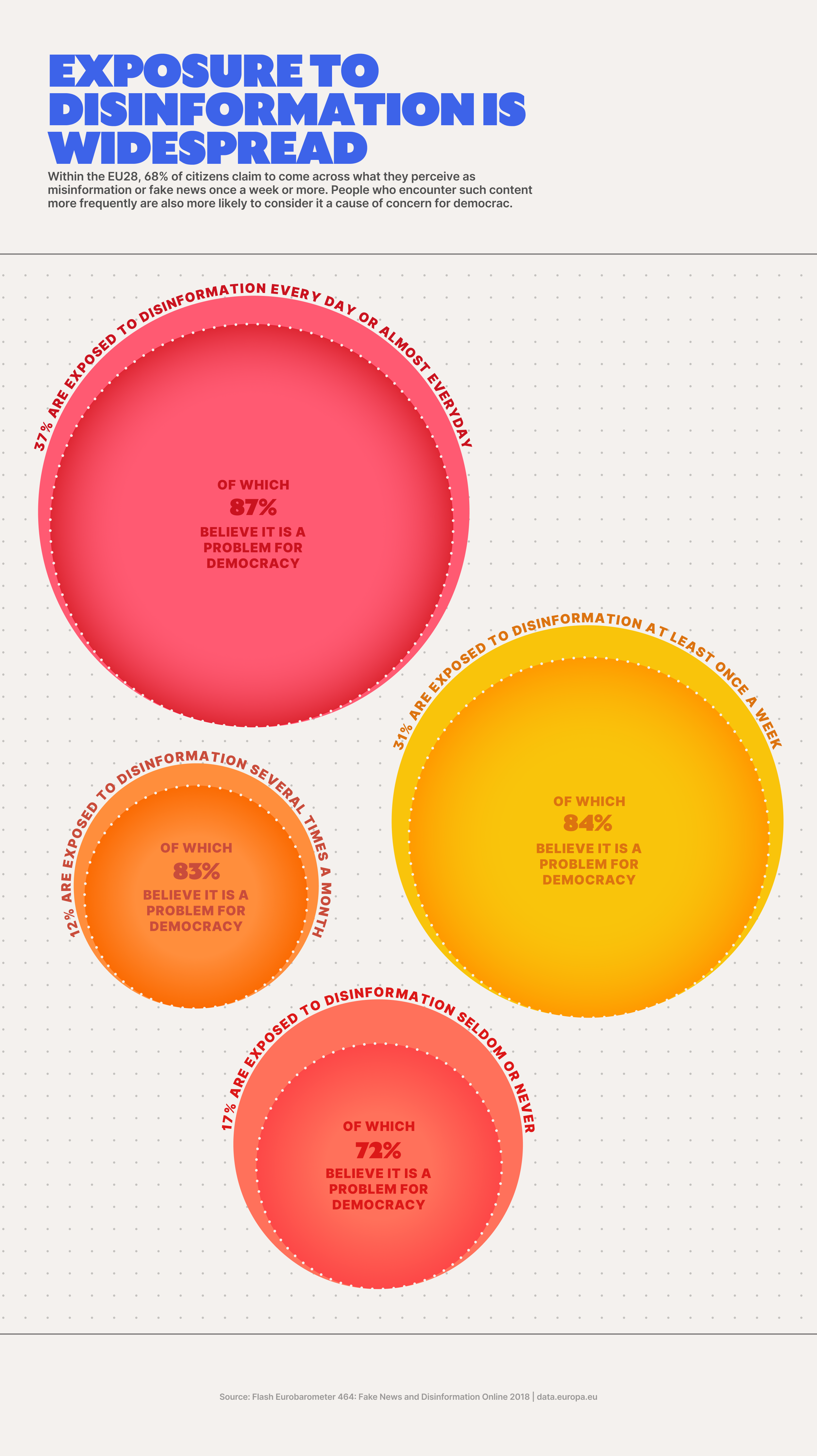

Another insight from the Eurobarometer on Fake News and Disinformation Online (2018) is that respondents who say they encounter fake news more frequently are more likely to consider it a problem. Indeed, 68 % of citizens reported coming across what they perceive as misinformation or fake news once a week or more (Figure 4). Among those who reported coming across fake news every day or almost every day, 88 % see it as a problem for democracy in general. The proportion is lower among respondents who state that they seldom or never encounter fake news (72 %).

Figure 4: Concern about disinformation on democracy in relation to the level of self-reported exposure to fake news

Source: Eurobarometer on Fake News and Disinformation Online 2018

AI can facilitate the spread of disinformation

The above discussion provides a sense of the concern citizens have regarding disinformation and the risks it poses to society. As technology advances, particularly in the area of AI, it is important to note that it can be a powerful tool, capable of contributing positively to various aspects of our lives. However, technology like AI can also be misused, enabling the spread of disinformation.

Studies, such as those for the European Parliamentary Research Service, have sought to conceptualise how AI can be used to spread inaccurate information. Two widely used concepts are filter bubbles and hallucinations. Firstly, AI algorithms can unintentionally create filter bubbles, wherein individuals are more likely to see content that aligns with what they already believe due to algorithmic bias. When individuals engage with posts containing disinformation, they both consume false content and signal to the algorithm that it should show them more content like it. Through repeated exposure to such content, individuals may become more likely to believe disinformation.
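The reinforcement loop behind a filter bubble can be illustrated with a toy model. The sketch below is not any real platform's recommendation algorithm: it assumes a feed with only two kinds of content, a user who engages more often with belief-aligned content, and a ranking weight that grows with expected engagement. The function name, the starting weights and the engagement rates are all hypothetical.

```python
def simulate_feed(rounds=50, engage_aligned=0.6, engage_other=0.2):
    """Track the share of belief-aligned content shown over successive rounds.

    Toy model with assumed parameters: each round, the feed shows aligned
    content in proportion to its weight, and each weight grows by the
    expected engagement (exposure multiplied by engagement rate).
    """
    weight_aligned, weight_other = 1.0, 1.0
    shares = []
    for _ in range(rounds):
        share = weight_aligned / (weight_aligned + weight_other)
        shares.append(share)
        # Engagement feeds back into the ranking weights.
        weight_aligned += share * engage_aligned
        weight_other += (1 - share) * engage_other
    return shares


shares = simulate_feed()
print(f"round 1: {shares[0]:.2f}, round {len(shares)}: {shares[-1]:.2f}")
```

Under these assumptions, the share of aligned content starts at one half and rises every round, because the content that attracts more engagement is shown more often and therefore attracts still more engagement.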

Secondly, AI systems, including generative AI, can generate information that is incorrect, biased, or inaccurate (called a hallucination). Just as people can consume disinformation, so can AI models. If AI models are trained on unreliable internet data, there is a risk of inadvertently creating and disseminating inaccurate information. In contrast to these unintentional mistakes, generative AI could also be used to deliberately propagate disinformation. This is highlighted by the European Union Agency for Law Enforcement Cooperation (Europol), which explains that AI's ability to swiftly produce authentic-sounding text at scale presents a risk if used to overwhelm internet sources with targeted disinformation. These emerging trends align with the insights presented in the ENISA Foresight 2030 Threats (2023) booklet, which outlines upcoming cybersecurity challenges.

Open data as a tool against AI-facilitated cyber disinformation

However, generative AI can also be used to fight disinformation and other cyber threats. For example, data about disinformation campaigns can be used to train advanced algorithms to forecast scenarios and foresee future trends. This information can inform efforts to develop solutions that enhance cyber defence and resilience.

Furthermore, in efforts to combat the challenge of AI-facilitated cyber disinformation, several EU tools have been developed using open data to provide fact-checking capabilities. For instance, during the COVID-19 pandemic, the Covid Fake News Detector was developed based on open data and allows individuals to check news in German about COVID-19 to determine its reliability. Another more recent use case illustrating the power of open data is the EUvsDisinfo database. EUvsDisinfo is run by the European External Action Service’s East StratCom task force and uses data analysis and media monitoring services in fifteen languages to identify, compile and expose disinformation originating in pro-Kremlin media. The result is a unique searchable, open-source repository which currently stores over 6500 samples of pro-Kremlin disinformation. The task force aims to raise awareness about disinformation and its impact on EU societies and continues to update the database weekly and provide a trend summary.

Open data can also be used to promote media literacy and help citizens to spot disinformation and avoid cyberattacks. The European Digital Media Observatory is an initiative launched by the EU and uses open data sources to gather and analyse information related to disinformation campaigns. The observatory also promotes the sharing of open data to enhance transparency and cooperation among fact-checkers and researchers to counter disinformation. This initiative serves as an example of how making data openly accessible can empower individuals to critically assess the information they view online.

Conclusion

The open data explored in this data story illustrates how disinformation is an important concern for EU citizens. The spread of disinformation can be facilitated through AI technologies, including generative AI, which can be misused to amplify disinformation by reinforcing existing beliefs and generating misleading content. These advanced technologies present new challenges in countering the spread of disinformation. They also present opportunities in the form of innovative solutions to combat disinformation.

Open data helps us understand the problem of cyber disinformation. Indeed, insights from open data reveal that cyber disinformation affects individuals’ sense of security and can reduce their trust in governmental and other authoritative sources of information. What is more, open data is part of the solution, empowering individuals and organisations to leverage existing tools and create new ones to maintain vigilance and verify their sources of information.

Do you have questions about disinformation, fake news, and democracy in the EU? Translate your curiosity into insights with open datasets on data.europa.eu. Also, stay tuned for our next data stories and webinars by subscribing to our newsletter and following data.europa.eu on social media.

Data visualisations by Matteo Moretti and Alice Corona.